Made with AI.

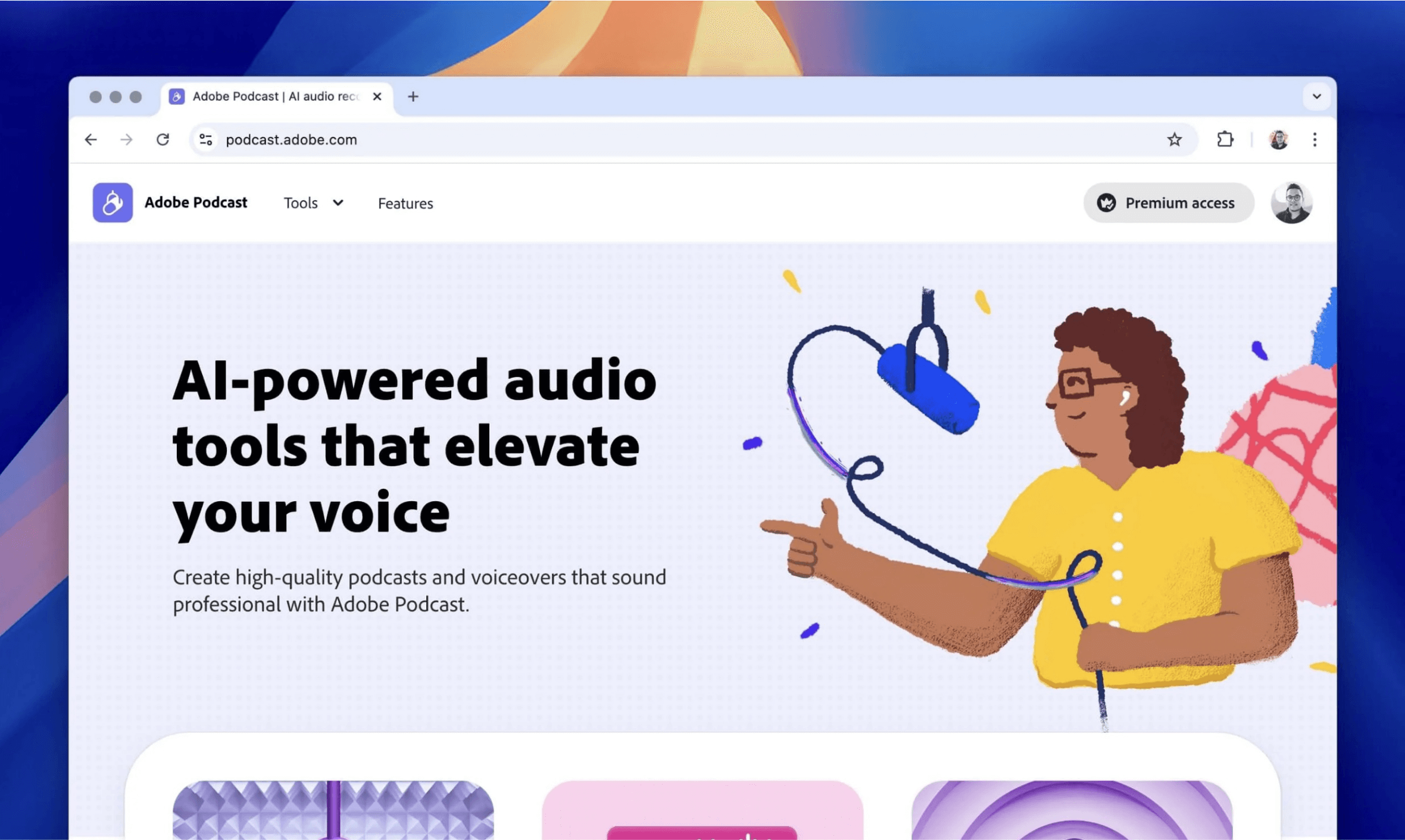

Adobe Podcast AI Audio

I work with research and engineering to turn audio models into experiences like Enhance Speech, which improves clarity and reduces noise; Generate Soundtrack, which scores videos with commercially safe generative music; and Studio, which offers multi-language speech-to-text so that audio can be edited like a document.

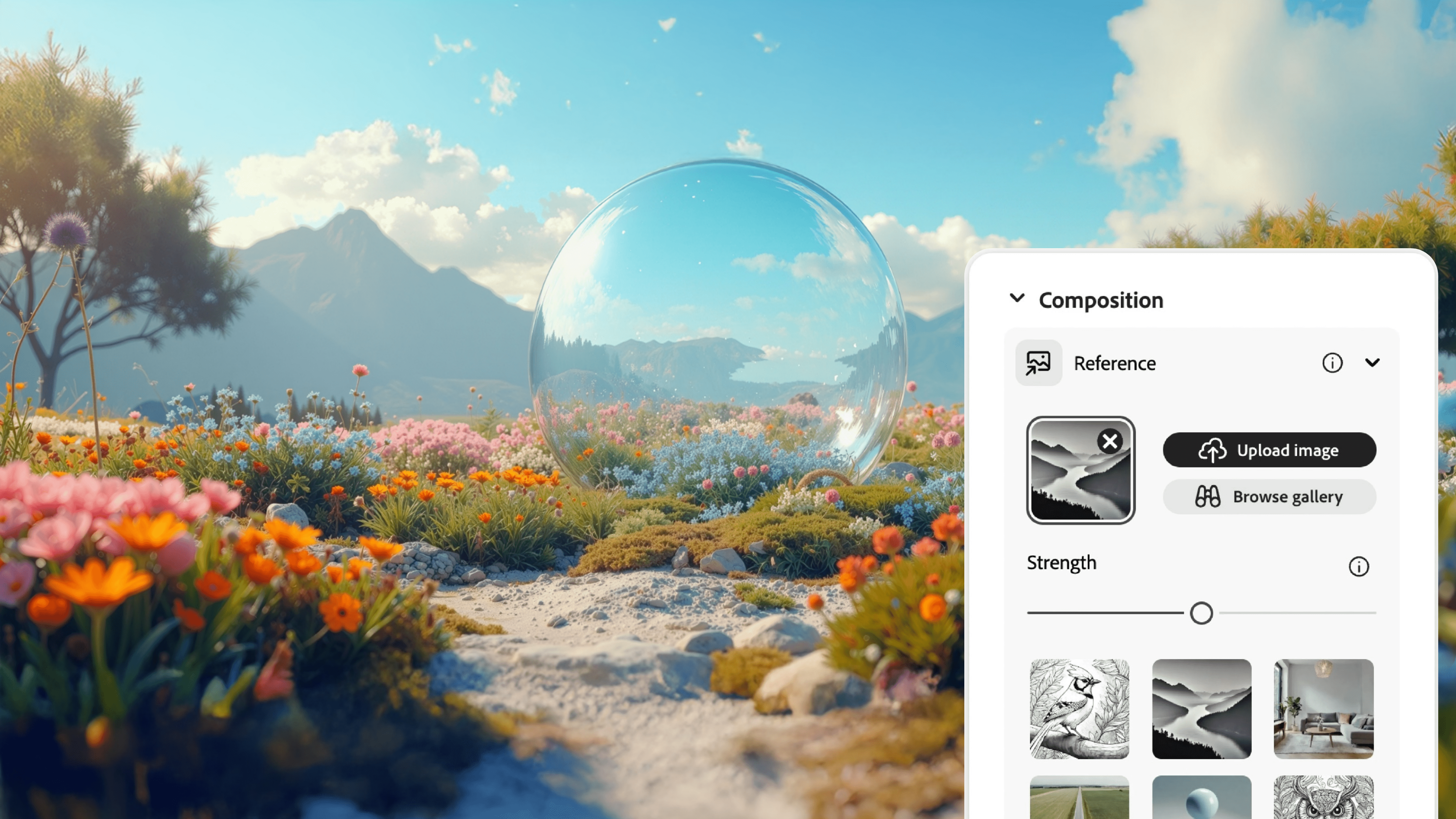

Adobe Firefly AI Imaging & Design

Previously, I worked on Adobe Firefly, Adobe's family of generative AI models for imaging. I partnered with research, engineering, and ethics teams on evals to refine model quality, and I built features like composition reference that help users unlock the model's capabilities in intuitive ways to guide creation.

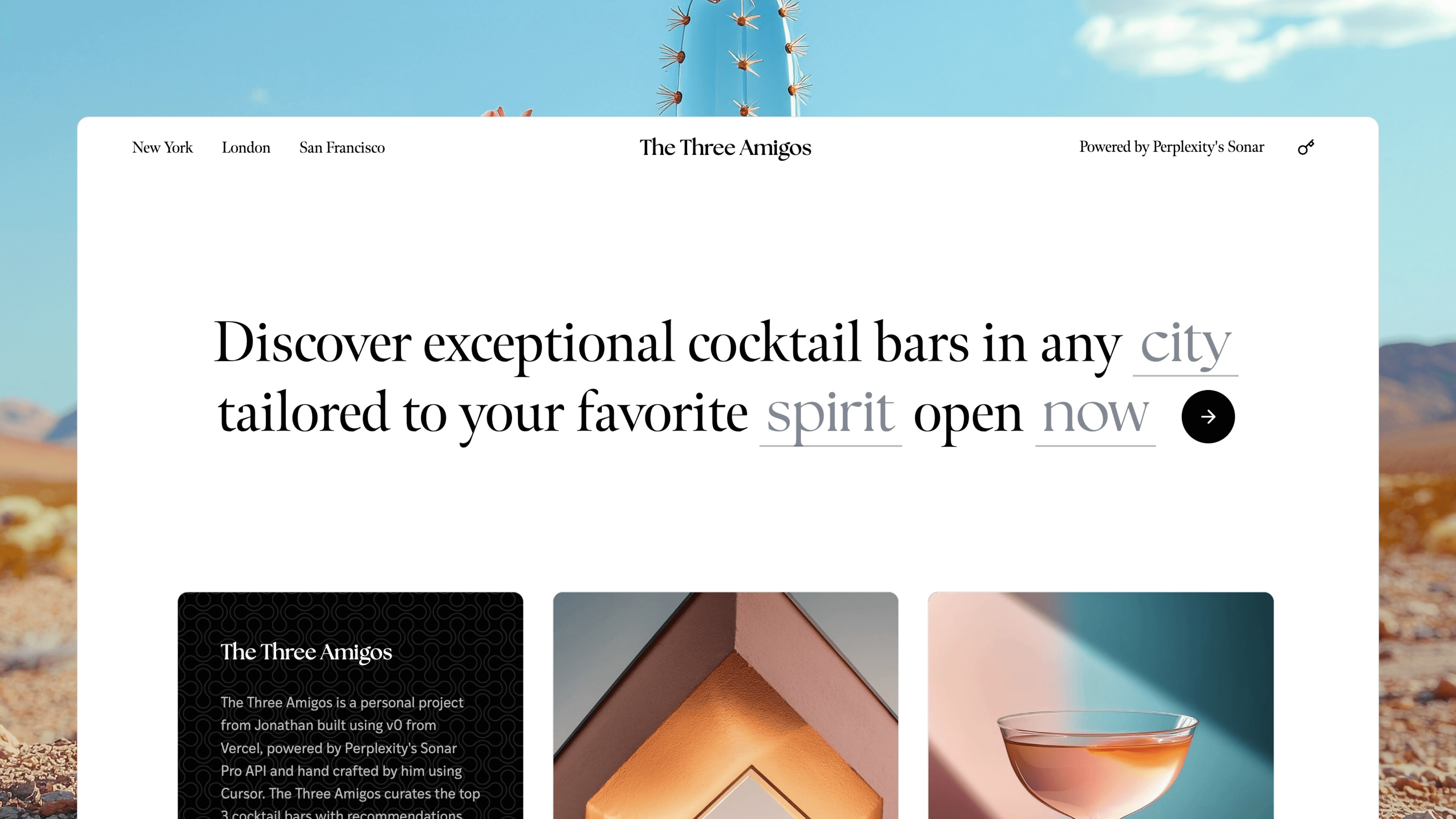

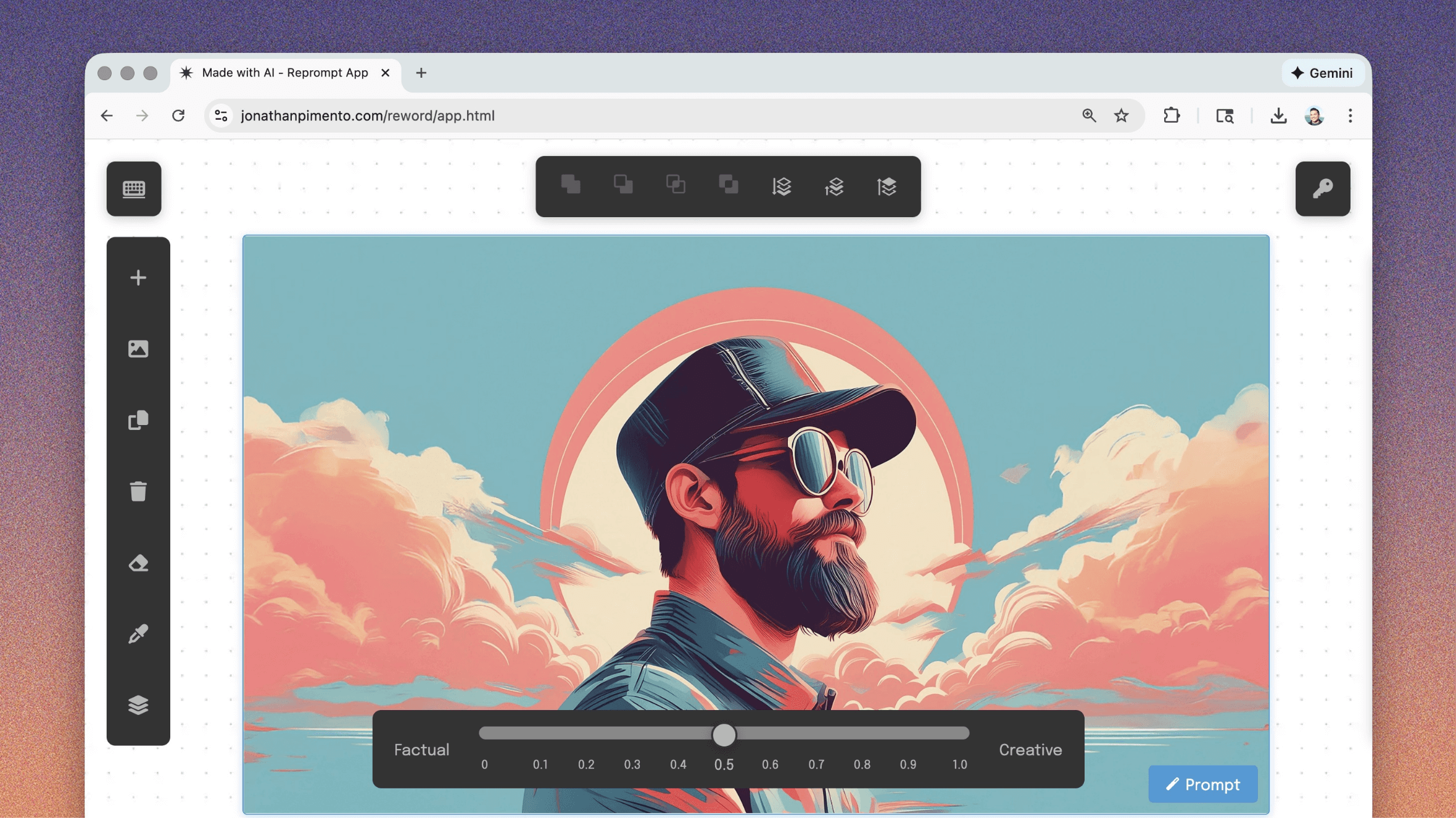

AI Projects Vibe Coding & Prototyping

Below is a mix of my work in this space—from building AI products at Adobe to personal tools and prototypes that explore LLMs, models, and vibe coding workflows. It reflects a journey that blends equal parts code, craft, and product—shaped by my engineering background, design taste, and product expertise.

Adobe